


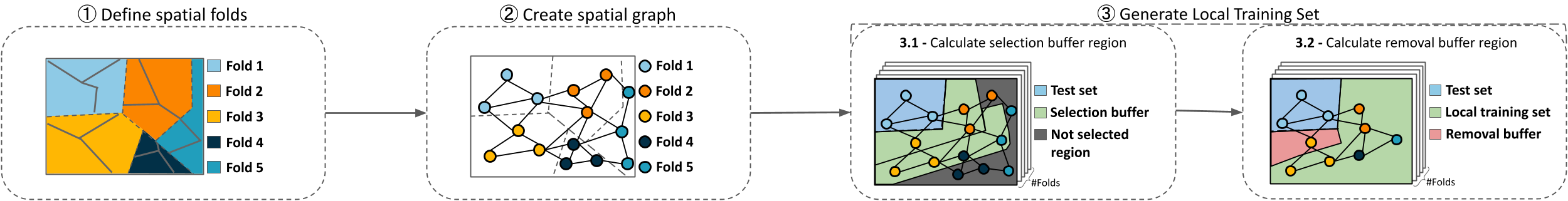

Recently, there has been increasing discussion about the use of Spatial Cross-Validation (SCV) techniques to assess models learned from data with spatial dependence. While some authors advocate always using SCV, others do not recommend it, since it can produce pessimistic results. In my view, whether you should use such a validation technique depends on your sample distribution, as discussed in (cite), and on how confident you are that the spatial dependence structure observed in the sample will also hold for out-of-sample data.

To make this concrete, imagine a sample dataset S with a distribution D that presents a spatial dependence structure. If S is representative enough that D also holds for the out-of-sample data, then traditional Cross-Validation (CV) will not give an optimistically biased estimate of the generalization error. On the other hand, if you are not confident that D will be observed in the out-of-sample data, or you know that your sample is biased (e.g., you collected data from spatially clustered areas), then SCV is a better option than traditional CV. However, keep in mind that SCV evaluates your model in the worst-case scenario, where the dependence structure of the test set differs from that of the training set. Under this evaluation, the best models are those that generalize regardless of the spatial structure of the data. In summary, SCV is not a definitive validation technique for data with spatial dependence, but it is a theoretically established method for evaluating models in worst-case scenarios.
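To illustrate the difference between the two protocols, here is a minimal Python sketch (not the author's code) that builds folds both ways. Traditional CV shuffles individual samples into folds. The spatial variant assumed here is simple block CV: points are grouped into square grid cells of side `block_size`, and whole cells are assigned to folds, so neighbouring samples never straddle the training/test boundary. The function names and the grid-block strategy are illustrative assumptions; SCV has other variants (e.g. clustering- or buffer-based).

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float]


def random_kfold(n: int, k: int, seed: int = 0) -> List[List[int]]:
    """Traditional CV: shuffle sample indices and deal them into k folds."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]


def spatial_block_kfold(coords: Sequence[Point], k: int,
                        block_size: float, seed: int = 0) -> List[List[int]]:
    """Spatial CV (block variant, an illustrative assumption): every point
    in the same square grid cell is placed in the same fold."""
    blocks: Dict[Tuple[int, int], List[int]] = defaultdict(list)
    for i, (x, y) in enumerate(coords):
        # Grid cell containing the point (floor division handles negatives).
        cell = (int(x // block_size), int(y // block_size))
        blocks[cell].append(i)
    cells = sorted(blocks)
    random.Random(seed).shuffle(cells)
    folds: List[List[int]] = [[] for _ in range(k)]
    # Deal whole cells, not individual points, into the k folds.
    for j, cell in enumerate(cells):
        folds[j % k].extend(blocks[cell])
    return folds
```

Choosing `block_size` is the hard part. A common heuristic, treated here as an assumption, is to make blocks at least as large as the distance over which the data remain spatially correlated, so that held-out blocks actually break the dependence between training and test sets.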

Although cross-validation is a widely used procedure for assessing machine learning models, it can lead to optimistic results when evaluating data with spatial dependence, because the spatial dependence structure observed in the sample dataset may not be present in out-of-sample data. As a result, the estimated model error will be biased and will not reflect the error on the entire population. Alternatives that deal with spatial dependence in model validation evaluate a worst-case scenario, in which the training data do not present the same spatial dependence as the test data. The selected models are therefore those that learn best independently of the spatial dependence structure. However, creating these scenarios is not an easy task: we need to remove training data to ensure independence between the test and training data without increasing the probability of overfitting. This project addresses these issues by proposing a graph-based spatial cross-validation approach to assess models learned from spatially contextualized datasets.
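The post does not detail the graph-based approach, so the following is only a hypothetical Python sketch of the underlying idea of removing training data to break dependence. It connects samples closer than an assumed dependence radius `radius` in an undirected neighbourhood graph, then drops every training node adjacent to a test node. The names `neighbourhood_graph` and `buffered_split` and the fixed-radius criterion are illustrative assumptions, not part of the project.

```python
import math
from typing import Dict, List, Sequence, Set, Tuple

Point = Tuple[float, float]


def neighbourhood_graph(coords: Sequence[Point], radius: float) -> Dict[int, Set[int]]:
    """Undirected graph (hypothetical): an edge joins two points whose
    Euclidean distance is smaller than `radius`."""
    graph: Dict[int, Set[int]] = {i: set() for i in range(len(coords))}
    for i in range(len(coords)):
        for j in range(i + 1, len(coords)):
            if math.dist(coords[i], coords[j]) < radius:
                graph[i].add(j)
                graph[j].add(i)
    return graph


def buffered_split(graph: Dict[int, Set[int]],
                   test: Set[int]) -> Tuple[List[int], List[int]]:
    """Return (train, removed). Training candidates adjacent to any test node
    are removed, so no remaining training point neighbours a test point."""
    removed: Set[int] = set()
    for t in test:
        removed |= graph[t] - test
    train = sorted(set(graph) - test - removed)
    return train, sorted(removed)
```

For five points spaced one unit apart on a line with `radius=1.5`, holding out point 2 removes its neighbours 1 and 3 and leaves `[0, 4]` for training. The example also exposes the trade-off described above: a larger radius removes more training data, which weakens the dependence between training and test sets but leaves fewer samples to fit the model.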

Published in BRACIS, 2017

T. P. da Silva, G. A. Urban, P. De Abreu Lopes and H. de Arruda Camargo, “A Fuzzy Variant for On-Demand Data Stream Classification,” 2017 Brazilian Conference on Intelligent Systems (BRACIS), 2017, pp. 67-72, doi: 10.1109/BRACIS.2017.60.

Published in FUZZ-IEEE, 2018

T. P. da Silva, L. Schick, P. de Abreu Lopes and H. de Arruda Camargo, “A Fuzzy Multiclass Novelty Detector for Data Streams,” 2018 IEEE International Conference on Fuzzy Systems (FUZZ-IEEE), 2018, pp. 1-8, doi: 10.1109/FUZZ-IEEE.2018.8491545.

Published in BRACIS, 2018

V. Souza, T. Pinho and G. Batista, “Evaluating Stream Classifiers with Delayed Labels Information,” 2018 7th Brazilian Conference on Intelligent Systems (BRACIS), 2018, pp. 408-413, doi: 10.1109/BRACIS.2018.00077.

Published in CIARP, 2018

da Silva, T. P., Souza, V. M. A., Batista, G. E. A. P. A., & de Arruda Camargo, H. (2018, November). A fuzzy classifier for data streams with infinitely delayed labels. In Iberoamerican Congress on Pattern Recognition (pp. 287-295). Springer, Cham.

Published in FUZZ-IEEE, 2020

T. P. da Silva and H. de Arruda Camargo, “Possibilistic Approach For Novelty Detection In Data Streams,” 2020 IEEE International Conference on Fuzzy Systems (FUZZ-IEEE), 2020, pp. 1-8, doi: 10.1109/FUZZ48607.2020.9177582.

Published in BRACIS, 2020

Cristiani, A. L., da Silva, T. P., & de Arruda Camargo, H. (2020, October). A Fuzzy Approach for Classification and Novelty Detection in Data Streams Under Intermediate Latency. In Brazilian Conference on Intelligent Systems (pp. 171-186). Springer, Cham.

Published in KDMILE, 2020

Jacintho, L. H. M., da Silva, T. P., Parmezan, A. R. S., & Batista, G. E. A. P. A. (2020, October). Brazilian Presidential Elections: Analysing Voting Patterns in Time and Space Using a Simple Data Science Pipeline. In Anais do VIII Symposium on Knowledge Discovery, Mining and Learning (pp. 217-224). SBC.

Published in JIDM, 2021

Jacintho, L. H. M., da Silva, T. P., Parmezan, A. R. S., & Batista, G. E. A. P. A. (2021). Analyzing Spatio-Temporal Voting Patterns in Brazilian Elections Through a Simple Data Science Pipeline. Journal of Information and Data Management.

